Project Experience

I am passionate about exploring various robotic platforms and concepts, continually seeking to develop intriguing projects that align with my interests and aspirations. Through hands-on experience and a creative problem-solving mindset, I aim to make meaningful contributions that benefit society.

Simulation

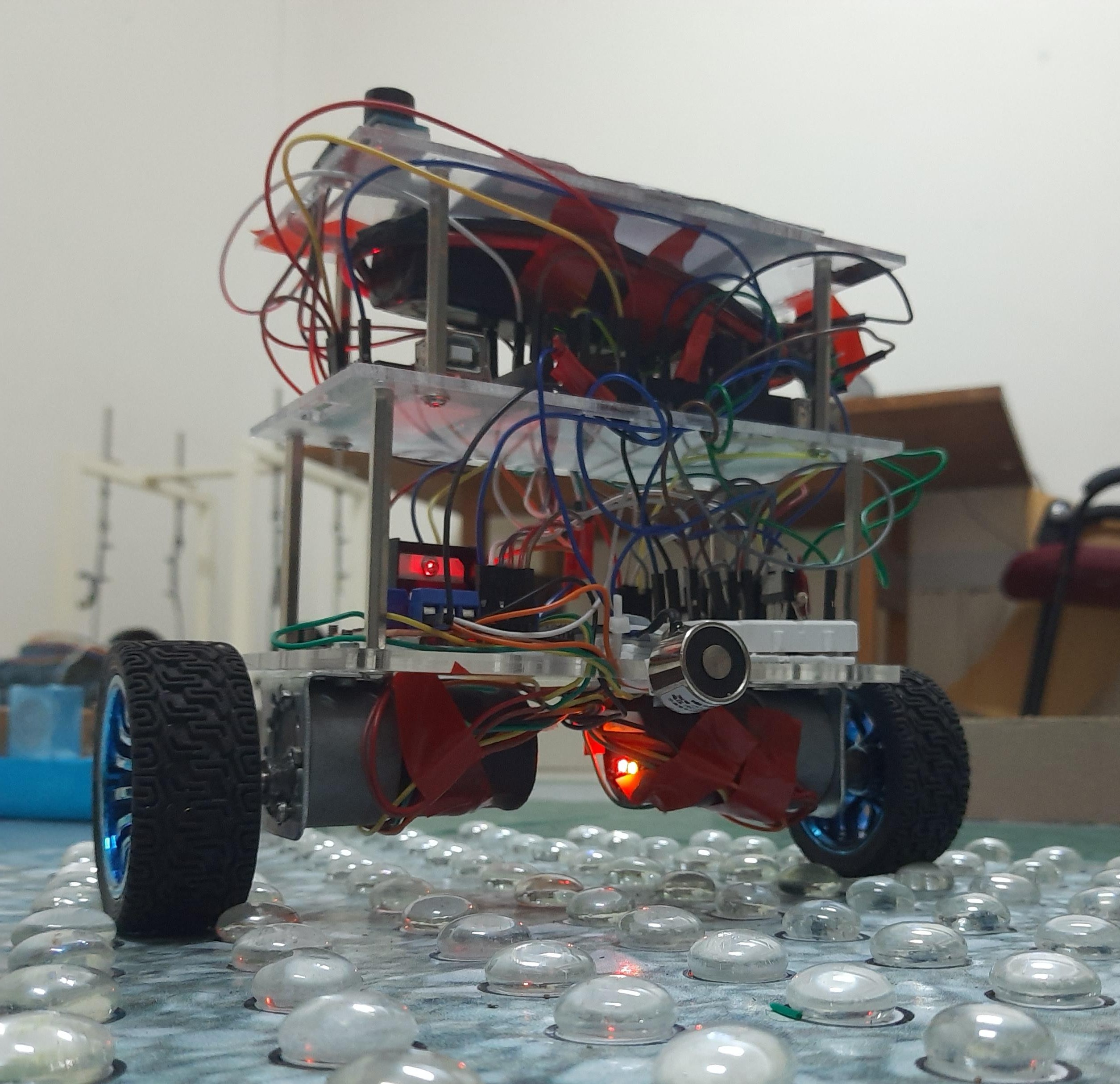

Hardware

VIO_Mapping_and_Localization

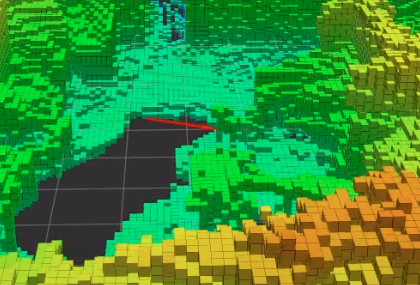

Lidar_Mapping_and_Localization

PuSHR_Perception

xArm_Planning_and_Avoidance

VIKRAM_Playlist

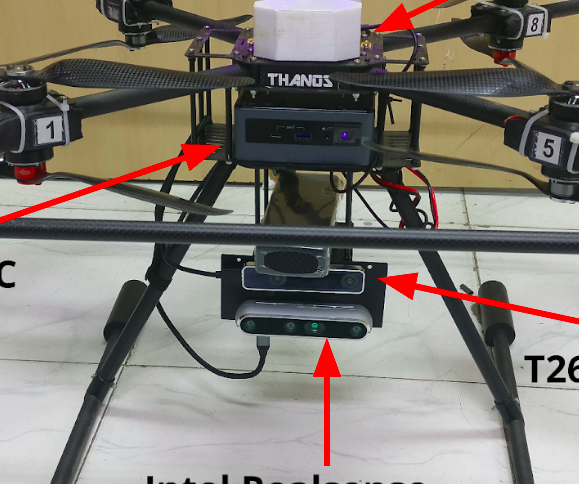

Vikram_Design

Vikram_Control

Vikram_Manipulation

Vikram_Navigation

e_Yantra_Competition

Positon_Hold

Follow_Line

Traverse_Bridges

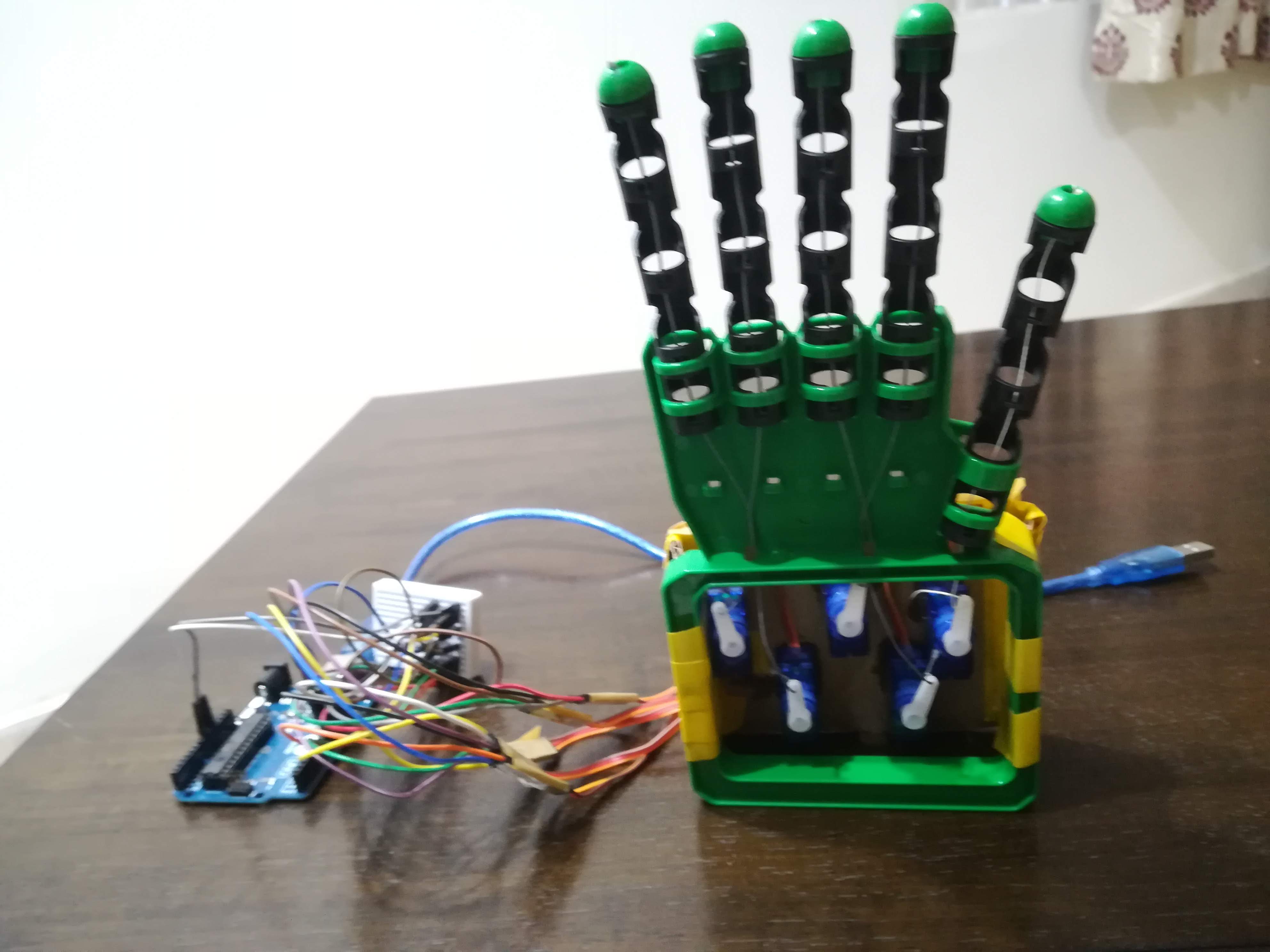

Robotic_Arm_Imitation
