Course Projects and Experience

University of Michigan: Master of Science in Robotics - Ann Arbor, USA

Courses: Mathematics for Robotics, Robotics Systems Lab, Mobile Robotics, Computer Vision, Robot Algorithms, Robot Manipulation.

BMS College of Engineering: Bachelor of Engineering in Electrical and Electronics - Bengaluru, India

Courses: Computer Vision, Machine Learning, Linear Algebra, Control Systems, Digital Signal Processing, Circuit Analysis.


Mapping_Recording

SLAM_Recording

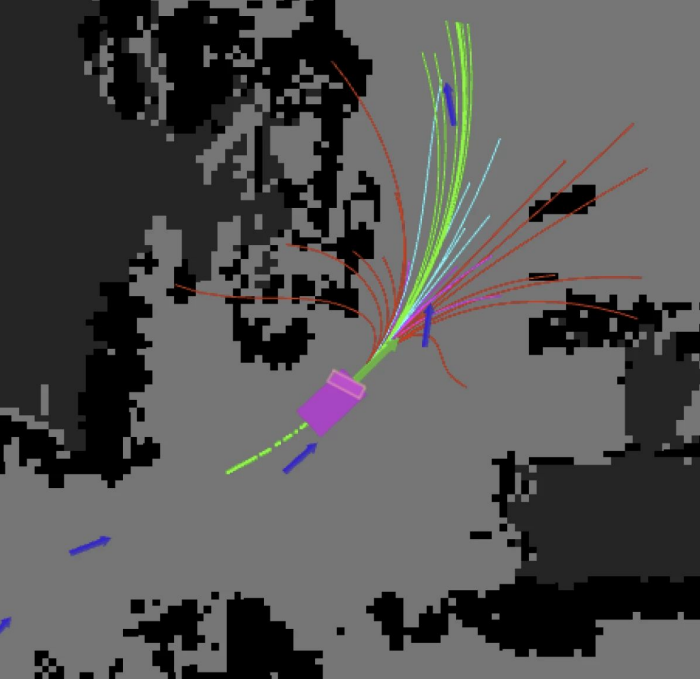

A-star_Planner

RRT_Planner

Point_Cloud_Registration

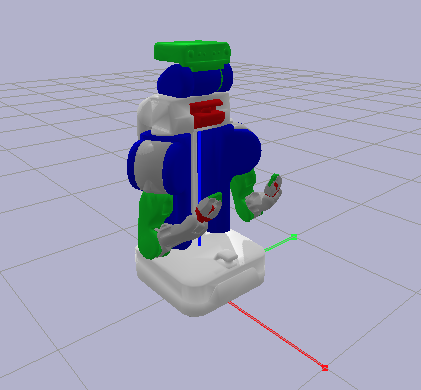

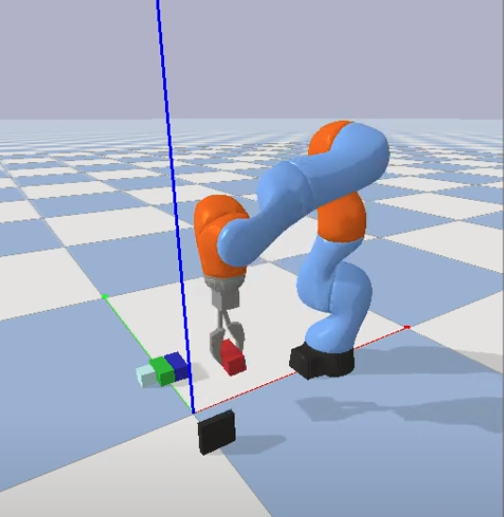

Autonomous_Manipulation

Kinematics

Vision

Stacking

Autonomous_Navigation

Presentation

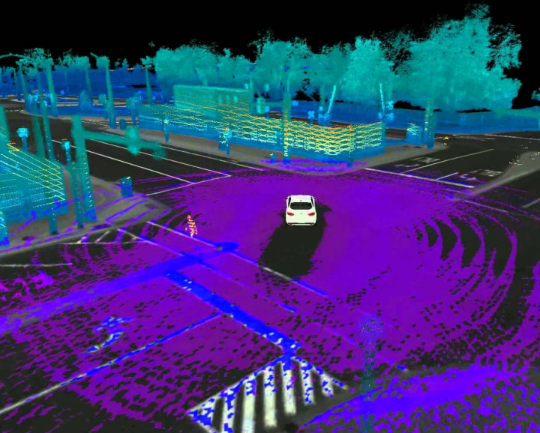

Liosam_Pointcloud_Detection

Dynamic_Feature_Removal

Masked_Liosam_Birdeye

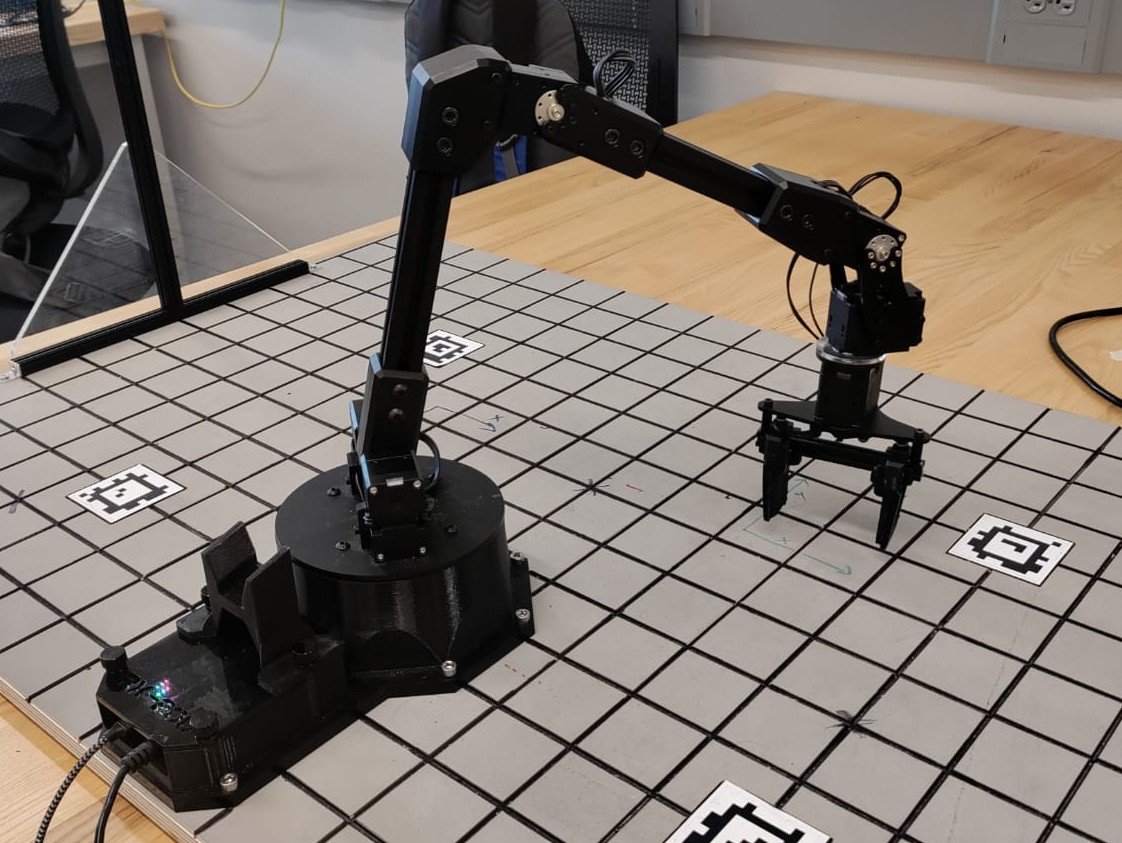

Push_and_Grasp_Manipulator

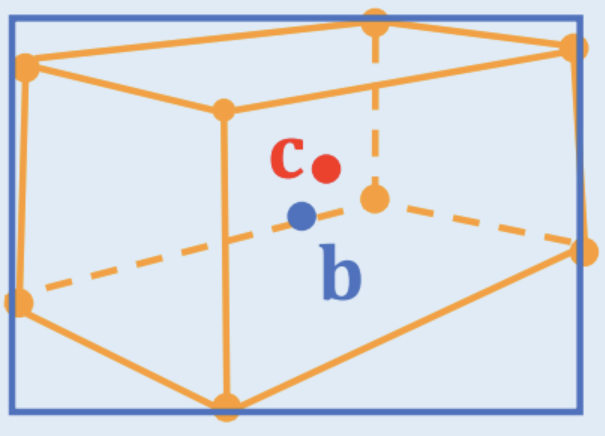

2D_to_3D_Estimation